Total immersion: Wētā Digital’s VFX toolset on Avatar: The Way of Water

Several years after we looked at the VFX of Avatar, we’re returning to the world of Pandora, with a deep dive into the technology behind Avatar: The Way of Water, specifically the 2,225 water shots that had to be rendered for James Cameron’s latest blockbuster.

The water was created with a simulation system from Wētā Digital that has just won the Emerging Technology Award at the 2023 Visual Effects Society (VES) Awards. The award recognises the team behind it, including Alexey Dmitrievich Stomakhin, Steve Lesser, Joel Wretborn, and Douglas McHale (WētāFX), who developed a cutting-edge water toolset that revolutionises how water is brought to life on the big screen.

Fired By Design recently had the chance to chat to Alexey Stomakhin, Principal Research Engineer at Wētā Digital, who played an instrumental role in developing the water toolset, and to Allan Poore, SVP of Wētā Tools at Unity Technologies, who leads the team responsible for commercialising Wētā Digital’s in-house tools.

Building the toolbox

According to Stomakhin, water development for the movie started around 2017, shortly before he moved from Walt Disney Animation Studios to Wētā Digital (now part of Unity Technologies). The intention was to create a toolset that Wētā artists could use out of the box.

“We basically set up a miniature show that consisted of example shots, dailies, and reference shoots, with a goal that we would use those representative shots to see if we can make our pipeline work in such a way that when we got those thousands of shots that are specific to the Avatar movies we would be prepared,” recalls Stomakhin. “We’d have the pipeline in place, and everything would be automated enough that with the onboarding of hundreds of new hires, we’d be able to train them, and they’ll be able to efficiently deliver consistent results across the board for all the different shots, no matter who works with the technology.”

“In any VFX production, there’s a tension between art direction and physical plausibility. We want to create an immersive experience, we want people to be submerged in this somewhat realistic world – or maybe it’s a fantasy world – but it needs to have some kind of consistent laws of nature integrated, so we’ll build the tools [to reflect that]. For Avatar, it is supposed to be a world with normal physical laws, more or less.

“That’s why most of the tools that we develop are physically based, and most of the numerical methods we use are very much grounded in scientific papers and previous research,” he adds.

In physics, the Navier-Stokes equations describe the motion of fluids such as water, but according to Stomakhin, applying them is not so easy from a practical standpoint. “When you’re talking about making a movie, you must make it practical. You must make sure you can stay on budget within reasonable time limits,” he adds. “When you’re dealing with such a massive production, on scales that range from scenes of hundreds of metres all the way down to the sub-millimetre scale (such as little droplets on skin), you have to make a solver that’s somewhat specialised to the application that you’re working on.”
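For reference, the incompressible Navier-Stokes equations in their standard textbook form are shown below, where u is the fluid velocity, p the pressure, ρ the density, ν the kinematic viscosity and f the external body forces per unit mass, such as gravity. This is general background rather than a description of Wētā’s solvers: Stomakhin’s point is that resolving these equations directly across every scale in a shot, from hundreds of metres down to sub-millimetre droplets, is impractical on a production budget.

$$
\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u} \cdot \nabla)\,\mathbf{u} = -\frac{1}{\rho}\nabla p + \nu \nabla^{2}\mathbf{u} + \mathbf{f}, \qquad \nabla \cdot \mathbf{u} = 0
$$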

That development work ultimately produced the toolset that made up the pipeline on Avatar: The Way of Water.

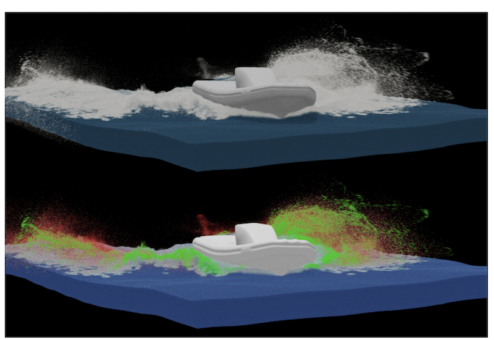

This pipeline started with the GPU procedural water solution that Wētā developed for Lightstorm Entertainment and used on stage when James Cameron deployed his virtual camera setup. “When he’s filming, he’ll see motion-captured people on his little digital screen transformed into Na’vi straightaway, but he would also see this representation of the water,” says Stomakhin. “It wouldn’t be from some random proxy geometry, it was a physically informed solution to water equations, such as Stokes or Gerstner waves. It’s a physically based approximation, but it would give those on the stage a pretty good idea of what the water would look like in the final shot, not only as far as the motion goes, but also the lighting.
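Gerstner (trochoidal) waves, one of the models Stomakhin mentions, suit real-time previews because each surface point simply travels on a circle, so the surface can be evaluated independently at every vertex. The minimal 2D sketch below uses the textbook formulation, assuming deep water (dispersion relation ω = √(gk)) and a sum of independent wave components. The function names and wave parameters are hypothetical, and this is not the on-stage implementation Wētā built for Lightstorm.

```python
import math
from typing import Iterable, Tuple

GRAVITY = 9.81  # m/s^2

def gerstner_point(x0: float, t: float, amplitude: float, wavelength: float,
                   phase: float = 0.0, g: float = GRAVITY) -> Tuple[float, float]:
    """Position at time t of the surface point that rests at x0, for one 2D
    Gerstner wave travelling in +x over deep water (hypothetical helper)."""
    k = 2.0 * math.pi / wavelength        # wavenumber
    omega = math.sqrt(g * k)              # deep-water dispersion: omega^2 = g k
    theta = k * x0 - omega * t + phase
    x = x0 - amplitude * math.sin(theta)  # points bunch together under the crests
    z = amplitude * math.cos(theta)       # surface height
    return x, z

def gerstner_surface(x0: float, t: float,
                     waves: Iterable[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Sum several (amplitude, wavelength, phase) components at rest position x0.
    Keep the sum of k * amplitude over all components below 1, or the surface
    can fold over itself."""
    x, z = x0, 0.0
    for amplitude, wavelength, phase in waves:
        xi, zi = gerstner_point(x0, t, amplitude, wavelength, phase)
        x += xi - x0  # accumulate horizontal displacement only
        z += zi
    return x, z

# Hypothetical swell plus a shorter chop: (amplitude m, wavelength m, phase rad)
waves = [(0.5, 20.0, 0.0), (0.2, 7.0, 1.3)]
for x0 in (0.0, 5.0, 10.0):
    print(gerstner_surface(x0, t=2.0, waves=waves))
```

Because each point depends only on its own rest position and the time, the same evaluation maps naturally onto a per-vertex GPU shader, which is what makes this kind of approximation viable for live previews.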

“The onstage capture data was then transferred to the studio, where we have a whole bunch of tools that are then used to create a proper physically based simulation. The centrepiece of that is the Loki framework, which we developed internally at Wētā.”

Loki is a framework for physics-based simulation of various phenomena and their interactions, including hair, cloth, water, fire, and smoke.

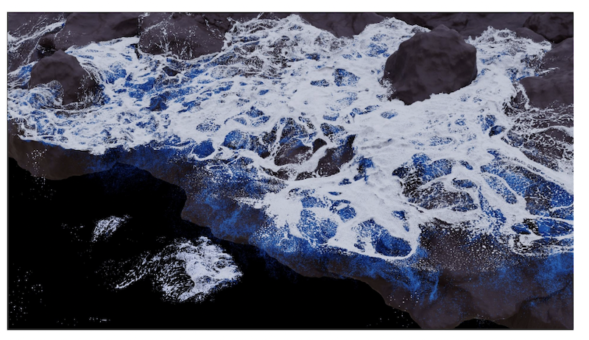

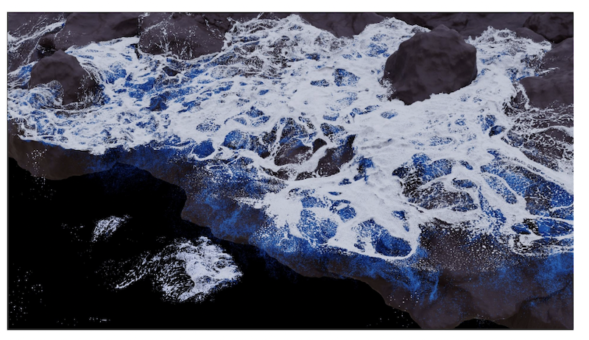

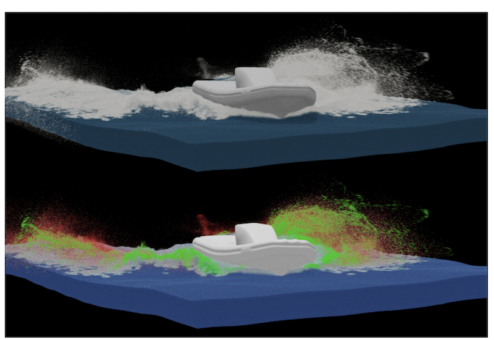

In Avatar: The Way of Water, Loki was used to create the majority of water effects, such as large splashes, underwater bubbles, foam, and high-fidelity interaction with a character’s skin.

“With Loki, the primary focus has been physics, because physics ensures scalability,” says Stomakhin. “It’s basically a guarantee that no matter who uses it, they’ll get a consistent result. So imagine 100 artists working on 100 different shots: you don’t want the water to end up looking different in each of those shots depending on their artistic preference. You essentially have to voluntarily limit yourself to a subset of this creative canvas, if you will, to ensure consistency, but every once in a while you have to break that convention and address certain artistic notes.”

This speeds up the delivery of VFX, particularly generic shots. “For reference, Avatar has 2,225 water shots, and obviously we wanted to get through them as fast as possible,” Stomakhin recalls. “Say you’re doing 100 generic shots with boats speeding through the water, and you just want a great result out of the box and to be done with those. For those kinds of scenarios, you certainly want the process to be as automated and streamlined as possible and to be grounded in some kind of reality – physical or not. That’s how you achieve consistency and get shots done in bulk, and that’s where I think we really made a lot of progress. But again, that’s at odds with the artistic aspects of some of the one-off shots.”

“For example, we had this internally implemented spray system, which was specifically developed to handle airborne secondary effects,” says Stomakhin. “If you have a big crashing wave, you start with this transparent, volumetric body of water, but as it hits a rock or a big ship, it shatters into spray. There’s an intermediate representation where the water droplets are still somewhat connected to each other, but the water is already disturbed enough that you start seeing whiteness in the result. Then the spray shatters into even smaller particles, or mist. So we have individual solvers for each of those phenomena, but they work in tandem, and I think the whole shot would be completed in the same pass. All those effects get solved at once, coupled to the surrounding air, because the air is also a big component in capturing the correct dynamics.”
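The coupling to the surrounding air can be illustrated with a textbook two-way drag exchange: each droplet is pulled toward the local air velocity, and the momentum it loses is handed back to the air, so the spray drags the air along with it. The sketch below is a deliberately minimal 1D version with a single air cell and a linear drag law. `Droplet`, `exchange_drag` and `drag_rate` are hypothetical names, and this is not a description of Wētā’s spray system.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Droplet:
    mass: float      # kg
    velocity: float  # m/s, 1D for clarity

def exchange_drag(droplets: List[Droplet], air_mass: float, air_velocity: float,
                  drag_rate: float, dt: float) -> float:
    """One explicit step of two-way linear drag between spray droplets and a
    single air cell (hypothetical model). Droplets are pulled toward the air
    velocity; the momentum they lose is added to the air. Needs
    drag_rate * dt < 1 to avoid overshoot. Droplets are updated in place and
    the updated air velocity is returned."""
    momentum_to_air = 0.0
    for d in droplets:
        impulse = d.mass * drag_rate * (air_velocity - d.velocity) * dt
        d.velocity += impulse / d.mass
        momentum_to_air -= impulse  # equal and opposite reaction on the air
    return air_velocity + momentum_to_air / air_mass

# Hypothetical numbers: fast spray thrown into still air
spray = [Droplet(mass=1e-6, velocity=12.0) for _ in range(1000)]
air_v = exchange_drag(spray, air_mass=1.2e-3, air_velocity=0.0, drag_rate=50.0, dt=0.005)
print(spray[0].velocity, air_v)
```

Because every impulse applied to a droplet is subtracted from the air, total momentum is conserved; a production solver would typically do this per grid cell, in 3D, and with a nonlinear drag law.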

It can also be used under the water. “When a character dives, he initiates a disturbance in the water and creates a lot of bubbles,” says Stomakhin. “So now it’s the other way around: instead of having water in the air, you have air particles inside of the water, but the same ideas apply. You have a bunch of mini solvers that work together, coupled with the surrounding water at the same time to achieve this realistic bubble effect. So we were able to capture all kinds of physical effects because we simulated it all properly in a physically based manner.”

The team had other tools at their disposal. “For instance, we had to have a separate tool for capturing thin film on character skin. The characters would get in and out of water, so they’d constantly be wet, with water rolling down the skin. We had to run a simulation down to sub-millimetre resolution; each particle in the sim would be a fraction of a millimetre in size, which is super small, so those simulations were really expensive. But it was worth it, because at the end of the day it gave us the realism that we were looking for,” he says.
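Simple arithmetic shows why sub-millimetre resolution is so costly. For a film that is roughly one particle layer thick, the particle count grows with the inverse square of the spacing, so halving the spacing quadruples the count (in a full 3D volume it would grow eightfold). The sketch below assumes one particle per spacing-by-spacing patch; the 1 m² area and the spacings are hypothetical figures, not production numbers.

```python
def film_particle_count(area_m2: float, spacing_m: float, layers: int = 1) -> int:
    """Rough number of particles needed to tile a surface of area_m2 at the given
    particle spacing, assuming one particle per spacing x spacing patch per layer."""
    per_layer = area_m2 / (spacing_m ** 2)
    return round(per_layer) * layers

# Hypothetical 1 m^2 patch of skin at three resolutions
for spacing_mm in (2.0, 1.0, 0.5):
    count = film_particle_count(1.0, spacing_mm / 1000.0)
    print(f"{spacing_mm} mm spacing -> {count:,} particles")
```

Going from 2 mm to 0.5 mm spacing takes the same patch from 250,000 to 4,000,000 particles, before accounting for the smaller time steps that finer resolution usually demands.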

According to Stomakhin, Loki can couple pretty much anything to anything else. “It’s a flexible framework, and even if something is not currently supported, it typically does not take a lot of effort to enable it,” he says. “Loki was designed with this idea of coupling in mind. You can run the solvers individually, but you can also run them all in a coupled manner, so you can solve for everything all at once.”
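Solving “all at once” has a concrete numerical benefit. In the toy example below, two phases (think of a parcel of water and a parcel of air) exchange momentum through a linear drag coefficient `k`. Updating them one after the other with explicit steps overshoots badly when the coupling is stiff, whereas solving both implicit equations together, here as a closed-form 2×2 system, conserves total momentum and always damps the relative velocity. This is a generic illustration of monolithic versus staggered coupling under those assumptions, not Loki’s actual formulation; the function names and numbers are hypothetical.

```python
from typing import Tuple

def staggered_step(m1: float, v1: float, m2: float, v2: float,
                   k: float, dt: float) -> Tuple[float, float]:
    """Update phase 1, then phase 2, each with an explicit drag step.
    Cheap, but unstable when k * dt / m is large (stiff coupling)."""
    v1_new = v1 + dt * k / m1 * (v2 - v1)
    v2_new = v2 + dt * k / m2 * (v1_new - v2)
    return v1_new, v2_new

def coupled_step(m1: float, v1: float, m2: float, v2: float,
                 k: float, dt: float) -> Tuple[float, float]:
    """Solve both implicit drag equations together:
         m1 (v1' - v1) = dt k (v2' - v1')
         m2 (v2' - v2) = dt k (v1' - v2')
    Total momentum is conserved exactly and the relative velocity always decays."""
    total_momentum = m1 * v1 + m2 * v2
    rel = (v1 - v2) / (1.0 + dt * k * (1.0 / m1 + 1.0 / m2))
    v1_new = (total_momentum + m2 * rel) / (m1 + m2)
    v2_new = (total_momentum - m1 * rel) / (m1 + m2)
    return v1_new, v2_new

# Hypothetical stiff case: a light phase moving through a heavy one
print(staggered_step(1.0, 10.0, 1000.0, 0.0, k=1e6, dt=0.01))  # blows up
print(coupled_step(1.0, 10.0, 1000.0, 0.0, k=1e6, dt=0.01))    # settles near a common velocity
```

The closed form follows from subtracting the two equations (after dividing by the masses) to get the new relative velocity, then using momentum conservation to recover each phase’s velocity.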

“In scientific terms, water in a vacuum is not that interesting, but water with air is what makes all the difference for the dynamics,” he adds. “You can actually get interaction between different components without needing to worry about setting up those individual interactions. Artists can pretty much just turn the toggle on and it happens under the hood.”